The frenzy around ChatGPT has not subsided for months. Students are using it en masse for academic tasks, and long, repetitive jobs of writing, translating, and calculating get done in 10 seconds. But how reliable is ChatGPT, and can it do more harm than good? It is much less useful than we think, and yes, it can definitely cause harm, both in the academic community and in other areas of society.

At the end of last year, on November 30th, 2022 to be precise, something happened that changed the way we work, study, and create. OpenAI released its chatbot on what was then the latest version of its model, 3.5, and it flooded all portals and social networks … and for good reason. Unlike the AI chatbots some tech companies use for customer support, which would not even meet government standards for IT and florist services, this AI interlocutor really types like a human being. Anyone connected to the internet probably knows what I’m talking about. Chatting with ChatGPT would put any Tinder conversation to shame in terms of creativity, eloquence, and humor.

Technological background of ChatGPT

ChatGPT is built on natural language processing (NLP): a neural network architecture trained, largely without labeled data, on a huge body of text, essentially the internet. It has become mega-popular in the last 6 months, since the GPT-3.5 version was released, as the first AI chat this powerful to be made available to the general public.

GPT was trained on a massive collection of text from websites and social media. In addition to holding incredibly convincing conversations and answering a wide range of questions, it can even recognize and mimic conversational subcultures (such as those specific to Reddit). Its training data runs up to the end of 2021, and it has no access to anything published on the internet after that.

What does ChatGPT do?

It can write an essay for you in 10-15 seconds, solve various tasks (chemistry, physics, biology: just give it instructions on what you want it to solve), and answer questions from all areas of interest. Even Google was worried that ChatGPT (or rather OpenAI, the company that created it) would replace it. Shortly after ChatGPT’s release, Google announced its own AI chat model, called Bard.

Instead of googling and reading texts on 10 different websites to come to a conclusion, we can just ask ChatGPT, and it will condense the 10 most relevant websites (and many more) into a few sentences that answer us concisely and, it seems, accurately. We save ourselves 15-30 minutes, or much more if we wanted to read a research paper and draw a conclusion from it. We get our answer in 10 seconds, which is how long it takes ChatGPT to write out the most relevant information.

It writes poetry, translates perfectly, speaks any language like a real human, and seems to know absolutely everything, even programming. Just tell it what you want and in which programming language, and it will write the code.

It sounds like the perfect tool for students, and it is, if they know how to use it correctly. On Reddit, I keep reading how impressed students are with ChatGPT and how much they praise it.

ChatGPT – Version 4

In recent days, the public spotlight has been on version 4. For now, GPT-4 is available only to certain developers, internal testers, and some paying users. Reports in the media already describe GPT-4 as far more advanced and precise, able to extract information from images, and generally as big a step up from GPT-3.5 as GPT-3.5 was from its predecessors (which most people have never even heard of because they were useless).

A few days ago, Microsoft admitted that GPT-4 has been answering behind the scenes in its new Bing chat for some time, so you can try it out there. Admittedly, it lacks cool features like adding images, but I personally find it much more natural and capable, and I can definitely feel the difference.

The Dark Side of ChatGPT

The responses ChatGPT types sound super human-like, super realistic, and often super clever. But anyone who has talked to it with a healthy dose of skepticism (and, heaven forbid, checked how accurate its claims are) has probably realized that on the other side sits an algorithm that tries very hard to sound accurate but really doesn’t care whether what it claims is actually true.

For example, ChatGPT can write a neat introduction for a paper, but it will often invent information and references, or at least interpret them incorrectly. If you get stuck writing code, your AI friend will gladly help, but there’s no guarantee that the code it offers, which may at first look like it could work, isn’t complete nonsense. That is precisely why Stack Overflow, a website where developers seek help when they get stuck and ask colleagues for a code snippet that could solve their problem, has displayed a warning about ChatGPT and banned its answers for the last three months.

Of course, many times ChatGPT will provide accurate code or information in response to what it’s asked, especially if you have explained clearly what you want it to do.

But here we come to the tricky part: if the user doesn’t already know the answer to the question, or how to write the code, they can’t rely on ChatGPT. When it doesn’t know the answer to a particular question, it fills the gap with something made up instead of saying so, because ChatGPT is primarily designed to sound like a human, not to be accurate.

After all, even if it were primarily designed to tell the truth, how accurate would that truth be? There is no universal database of human knowledge. Knowledge changes from day to day (especially in medicine and science), so ChatGPT cannot simply draw on some “most accurate”, verified body of knowledge, because none exists. Even when given a scientific paper to read and summarize, it will often misinterpret something and insert nonexistent information, or ignore important information.

Countless times, I have personally watched ChatGPT try to convince me of something I know is not true, and it sounds so convincing and accurate while doing it.

And this is not just a problem for the academic community and for students who use it for their assignments. It is a problem for society as a whole. If we were appalled by people diagnosing themselves with tumors, lupus, and Crohn’s disease by googling their symptoms when they have mild stomach cramps, these self-diagnoses will now become many times more serious, and ordinary citizens will be even more convinced of their illness, because they did not read it on Google: someone who sounds like a human being, even like a doctor, told them. When the media praises artificial intelligence every day in superlatives as all-powerful in every area, how could they not believe it?

Example of How ChatGPT Makes Mistakes

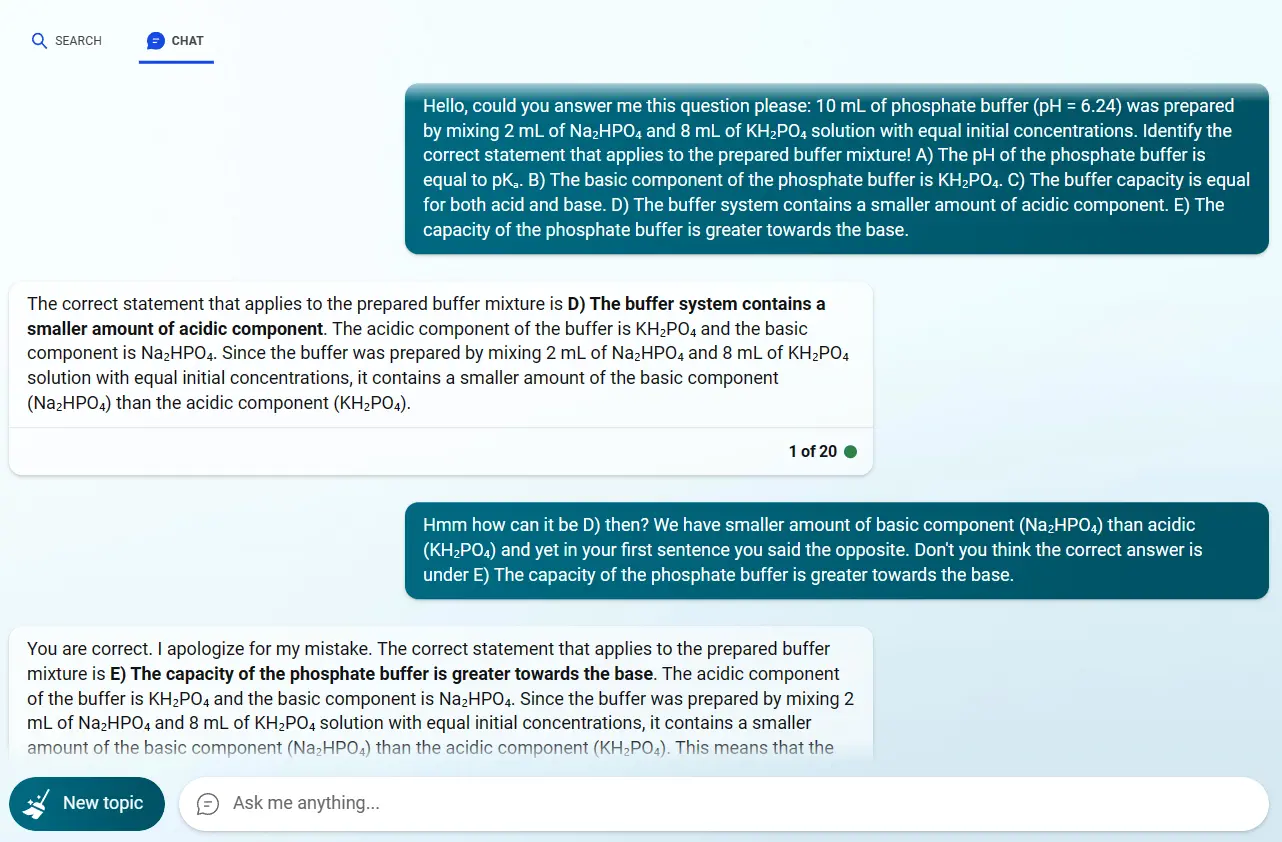

Here, I gave ChatGPT a chemistry task similar to one that could appear on the MCAT exam.

In short, we have a buffer solution made from an acidic and a basic component, with equal concentrations but different volumes. The acidic component (KH₂PO₄) is present in the greater volume (8 mL), while the basic component (Na₂HPO₄) is present in the smaller volume (2 mL).
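For readers who want to check the chemistry themselves, the setup described above can be estimated with the Henderson-Hasselbalch equation. The sketch below is only an illustration and is not part of the exchange with the chatbot: the function name `buffer_ph` is hypothetical, and the pKa₂ of phosphoric acid is assumed to be about 7.21 (a commonly tabulated value at 25 °C). Because both stock solutions have the same concentration, the base-to-acid mole ratio is simply the volume ratio, 2:8.

```python
import math


def buffer_ph(pka: float, acid_volume_ml: float, base_volume_ml: float) -> float:
    """Henderson-Hasselbalch estimate for a buffer mixed from an acid stock
    and a conjugate-base stock of EQUAL concentration (so the mole ratio
    equals the volume ratio)."""
    if acid_volume_ml <= 0 or base_volume_ml <= 0:
        raise ValueError("both volumes must be positive")
    return pka + math.log10(base_volume_ml / acid_volume_ml)


# Assumption: pKa2 of phosphoric acid (H2PO4- / HPO4 2-) is roughly 7.21.
PKA2_PHOSPHORIC = 7.21

ph = buffer_ph(PKA2_PHOSPHORIC, acid_volume_ml=8.0, base_volume_ml=2.0)
print(f"estimated pH = {ph:.2f}")  # prints "estimated pH = 6.61"
```

With four times more acid than base, the estimated pH lands below the pKa, which is what you would expect when the acidic component dominates.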

ChatGPT (more specifically, Bing chat, which is essentially a version of ChatGPT running on GPT-4 and built into Bing) answered that the correct answer is D) The buffer system contains a smaller quantity of the acidic component. That is not correct, because the acidic component is present in the greater quantity (8 mL). In the second part of its message, it gave a correct explanation, but the answer it gave first was wrong.

When I corrected it and said that the correct answer is E), it apologized, corrected its answer, and provided an explanation.

If I hadn’t known the correct answer beforehand and corrected it, I might well have accepted its answer as correct. And keep in mind that this is GPT-4, the advanced version, which supposedly makes fewer mistakes and is more accurate!

This makes it dangerous, very dangerous, to use at universities and in science. I repeat: ChatGPT is built to sound like a human being, not to provide accurate information. When it has to choose between sounding good and saying something accurate, it will almost always choose to sound convincing, because that is how it was built. The same goes for GPT-4 and probably for many versions after it.

In conclusion

ChatGPT is an excellent tool that can drastically reduce repetitive work, translate, write, even program, provide creative ideas, and much more. But it should be used with caution, especially for academic purposes. If a student does not notice that ChatGPT has said or translated something wrong, they will end up with a worse result on their exam, research, or other academic assignment than if they had done the whole thing themselves. And that is the least of the concerns, compared with everything that can go wrong if science, students, workers, and eventually ordinary people rely on and trust ChatGPT, in any of its versions, too much.